
For Network, Security and DevOps Professionals

EVE-NG Professional Edition:

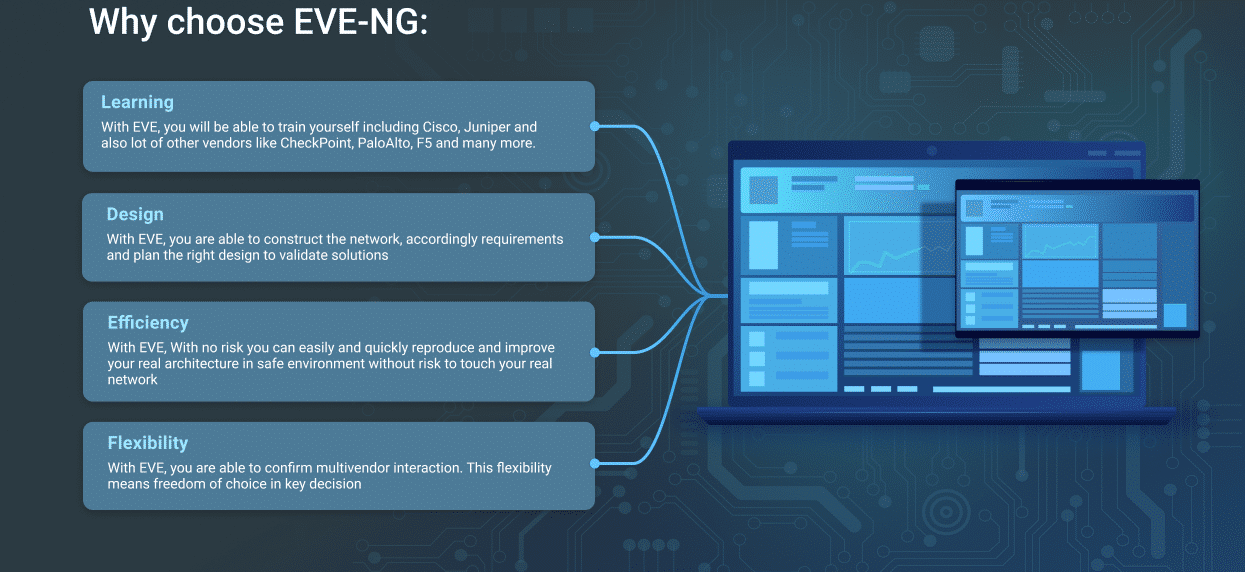

EVE-NG PRO platform is ready for today’s IT-world requirements. It allows enterprises, e-learning providers/centers, individuals and group collaborators to create virtual proof of concepts, solutions and training environments.

EVE-NG PRO is the first clientless multivendor network emulation software that empowers network and security professionals with huge opportunities in the networking world. Because it is managed without a client and can run in a completely isolated environment, EVE-NG PRO is a strong choice for enterprise engineers who would otherwise be restricted by corporate security policies.

| EVE Professional Edition: 3.0.1-16 (25 June 2020). Release notes link. |
|---|
| Upgrade notes: If you are upgrading EVE PRO or EVE LC from version 2.0.6.52 or earlier, follow the linked upgrade instructions. Upgrades are NOT supported for cloud EVE versions such as Google Cloud; install a fresh EVE PRO 3.0 cloud VM instead. 1. Back up your EVE-NG installation. 2. Follow the install instructions. |

| EVE-NG Professional/Learning Center Cookbook |
|---|
| EVE-NG PRO/LC Cookbook version 3.1 (25 June 2020). Download link. Cookbook section updates: 3.1.1 VMware Workstation EVE VM installation using ISO image. |

Some features: EVE gives you the power you need to master your network by designing and testing in a multivendor environment.

Here’s a tiny backstory: Looking back over the past five to six years, homelabbing has been one of my greatest pleasures, fiddling around until I come up with the right setup for whatever I’m learning. I went from VMs on my laptop to Xen on some old machine to VMware ESXi. ESXi is great, but with time I kept finding myself doing a lot of the same things over and over, and although I automated most of the repetitive parts, I felt like I wanted something a bit quicker that just works.

For around two years, oVirt has been my choice for hosting my run-of-the-mill (mostly Linux) labs. It works well with images, it can be automated, and it does nested virtualization very, very well. Nested virtualization and the speed of bringing VMs up were my two absolute favorite parts, especially as I started working on Contrail and OpenStack. I still happily run oVirt today for everything except networking labs. That’s where I realized I needed something fresh that tackles the problem of network device labs in a smarter way.

Enter EVE-NG

My problem began when I needed to run labs containing network devices (Juniper vMX, vSRX, Cumulus, VyOS, etc.). Unfortunately, creating such labs on oVirt, or even nested on KVM within a Linux host, can be very tricky and time-consuming, with lots of moving parts that can easily break. It just didn’t make sense to me that running a back-to-back connection between two devices meant creating a bridge and attaching both VMs to it. This is apparently also how ESXi does it, and it is a very tedious process that I don’t want to go through when I just want to quickly spin something up and try it. That’s when EVE-NG came into the picture.

Emulated Virtual Environment – Next Generation (EVE-NG) simplifies the process of running labs containing network devices, and the way to interconnect them with other virtual nodes. It solves the missing piece of making such labs easier to build, and it does it in an intuitive way.

EVE-NG supports a whole bunch of disk images for building labs. You have Linux (of course), and a growing list of supported virtual network devices including Juniper, Cisco, F5, and many others; the full list is on the EVE-NG website. The user interface makes it so that the topology you see actually works: you can place devices, drag and drop cables to establish connectivity and select the ports you want, and re-organize and label things. It presents everything cleanly while keeping lab building fast. There are many other features that you should definitely check out on their website.

EVE-NG Deployment Options

EVE-NG can be deployed on bare metal, as a VM on VMware (ESXi/Workstation/Fusion), or as a VM on GCP. I would highly recommend sticking with the officially supported deployment options to ensure that you’re getting the best possible performance, as well as support over the community chat.

From my personal experience: go for a bare metal installation. It provides the best possible performance for the hosted VMs. While you can deploy it on some other platforms (I tested EVE-NG nested on oVirt), it won’t work as well. Just to give you an example: booting vMX 17.4R1 on EVE-NG nested on KVM used to take me ~50 minutes until everything was up. On bare metal, it takes roughly 7 minutes from booting to the FPC coming online! Oh, and it gets better: vQFX starts in less than 3 minutes for me! That’s huge.

Community vs. PRO Edition

There are two flavors of EVE-NG: Community and PRO. Both share the same list of supported images; the differences are mostly about features. While I believe the Community edition will be suitable for most people (I used it for a while), the reason I went with the PRO edition is literally one feature: Hot Linking. Hot linking allows you to interconnect nodes while they are running, without needing to shut the nodes down, modify the connectivity, then start them again. Want to connect eth0 of a Linux box to xe-0/0/0 on vQFX? Four clicks and you’re done. Convenience and time saving. Some other PRO features, like integrated Wireshark and the ability to run containers in labs, are good to have, but it depends on what you plan to run. In a nutshell, test the Community edition first, then switch to PRO if device linking becomes irritating.

Before You Begin

Let’s say by this point you have decided to deploy EVE-NG. The official documentation covers each deployment scenario and what you need to do for it. Since I recommend going with a bare metal install, make sure you’re using Ubuntu 16.04 LTS for it, as it’s the supported release for now.

One thing I would recommend after you install EVE-NG is to also install the client-side supporting packages for your operating system. When accessing your labs, you can choose to log in via either the HTML5 Console or the Native Console. The HTML5 console opens a tiny popup within the Lab View which you can use to interact with the device. The Native Console opens a console connection via your terminal application (iTerm, SecureCRT, etc.). Installing the client-side packages helps make that work, and they contain other additions as well.

Your First Lab: Getting Images

Alright, you made it this far!

The first step to start labbing is, of course, getting the images of the devices you plan to use. While EVE-NG can theoretically support a lot of virtual editions of network and system devices (thanks to the community for sharing their experiences!), I recommend sticking to the officially supported images, at least at the beginning until you are comfortable enough with the platform. Also, note that the setup for each virtual node must follow a specific naming convention for its disk images, detailed in the EVE-NG documentation.

In the following examples, I’ll highlight the procedures for Juniper vQFX, vMX, vSRX3.0, and Linux.

Juniper vQFX

Start by obtaining the vQFX images from the Juniper Downloads website. For vQFX, we need to download two images: the PFE Disk Image (QCOW) as well as the RE Disk Image (IMG). For this test, I’ll use 18.4R2-S2.3.

After downloading both images to your server, you will end up with two files:

- PFE: cosim-18.4R1.8_20180212.qcow2

- RE: jinstall-vqfx-10-f-18.4R2-S2.3.img

We will start by creating the directories where those images will be hosted:
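A minimal sketch of this step, assuming the `vqfxre-` and `vqfxpfe-` directory prefixes from the EVE-NG naming convention; the `10K-F` part and the release suffix are free-form labels chosen here for illustration. `ADDONS` defaults to a scratch directory so the commands are safe to try anywhere; on the EVE-NG host, export `ADDONS=/opt/unetlab/addons/qemu` first.

```shell
# Assumption: scratch default for safe experimentation. On the EVE-NG host:
#   export ADDONS=/opt/unetlab/addons/qemu
ADDONS="${ADDONS:-$(mktemp -d)}"
RELEASE="18.4R2-S2.3"

# One directory per node type: routing engine (RE) and forwarding engine (PFE).
mkdir -p "$ADDONS/vqfxre-10K-F-$RELEASE" "$ADDONS/vqfxpfe-10K-F-$RELEASE"
ls -1 "$ADDONS"
```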

Next, move the downloaded images to their respective directories. Keep in mind that you MUST follow the naming convention discussed above or else your device will not be able to boot:
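As a hedged sketch of the move, assuming both vQFX nodes expect their disk to be named `hda.qcow2` (as in the EVE-NG how-to for vQFX). `place_image` is a hypothetical helper, and `DOWNLOADS` is an assumed variable pointing at wherever the images were downloaded. `ADDONS` again defaults to a scratch directory; set it to `/opt/unetlab/addons/qemu` on the EVE-NG host.

```shell
ADDONS="${ADDONS:-$(mktemp -d)}"   # on the EVE-NG host: /opt/unetlab/addons/qemu
DOWNLOADS="${DOWNLOADS:-.}"        # assumed location of the downloaded images
RELEASE="18.4R2-S2.3"

# place_image SRC DEST_DIR: move SRC into DEST_DIR under the disk name hda.qcow2.
# Hypothetical helper; a missing source is reported and skipped.
place_image() {
    if [ -f "$1" ]; then
        mkdir -p "$2" && mv "$1" "$2/hda.qcow2"
    else
        echo "skipping $1: file not found" >&2
    fi
}

place_image "$DOWNLOADS/jinstall-vqfx-10-f-$RELEASE.img" "$ADDONS/vqfxre-10K-F-$RELEASE"
place_image "$DOWNLOADS/cosim-18.4R1.8_20180212.qcow2"   "$ADDONS/vqfxpfe-10K-F-$RELEASE"
```

The original file names do not matter once the images are in place; EVE-NG only looks at the directory prefix and the disk file name.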

Last but not least, there’s a script that must be executed whenever making changes in images or template, to make sure that proper permissions are set on the files:
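The script in question is EVE-NG's `unl_wrapper` with the `fixpermissions` action. The sketch below guards the call so it does nothing harmful on a machine that is not an EVE-NG host; the guard is an addition for illustration, not part of the documented procedure.

```shell
# Path and action as documented by EVE-NG; only meaningful on the EVE-NG host.
WRAPPER=/opt/unetlab/wrappers/unl_wrapper

if [ -x "$WRAPPER" ]; then
    "$WRAPPER" -a fixpermissions
else
    echo "unl_wrapper not found; run this on the EVE-NG host" >&2
fi
```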

That’s it! If all goes well, you should be able to boot your first vQFX instance from EVE-NG UI:

Add the nodes (PFE and RE): Right Click => Nodes:

Add connectivity between RE and PFE (em1 / int) using Drag-and-Drop:

Start Nodes (Right Click => Start), and check Console (Click Node). Here I’m using HTML5 Console:

Juniper vMX

vMX works in pretty much the same way as vQFX (two nodes), but it consists of multiple disks. Also the download size is probably the biggest you would see. Grab the bundle tagged as KVM from Juniper website, likely to be named along the lines of vmx-bundle-19.4R1.10.tgz.

First, extract the tarball:
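A small sketch of the extraction, assuming the bundle unpacks into a `vmx/` directory with the disk images under `vmx/images/` (the layout used in the EVE-NG vMX how-to). `extract_bundle` is a hypothetical helper name.

```shell
# extract_bundle BUNDLE: unpack the vMX bundle into the current directory
# and list the disk images it contains. Hypothetical helper.
extract_bundle() {
    if [ -f "$1" ]; then
        tar -xzf "$1" && ls -1 vmx/images
    else
        echo "bundle $1 not found in $PWD" >&2
    fi
}

extract_bundle "${BUNDLE:-vmx-bundle-19.4R1.10.tgz}"
```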

Next, create the image directories:
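A sketch of the directory creation, assuming the `vmxvcp-` and `vmxvfp-` prefixes from the EVE-NG naming convention; the `-VCP` and `-VFP` suffixes are free-form labels picked here for readability. As before, `ADDONS` defaults to a scratch directory and should be `/opt/unetlab/addons/qemu` on the EVE-NG host.

```shell
ADDONS="${ADDONS:-$(mktemp -d)}"   # on the EVE-NG host: /opt/unetlab/addons/qemu
RELEASE="19.4R1.10"

# Control plane (VCP) and forwarding plane (VFP) are separate nodes in EVE-NG.
mkdir -p "$ADDONS/vmxvcp-$RELEASE-VCP" "$ADDONS/vmxvfp-$RELEASE-VFP"
ls -1 "$ADDONS"
```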

We’ll start with VCP, copy the following three files to the VCP folder:
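A hedged sketch of the VCP copy. The three source file names and the `virtioa`/`virtiob`/`virtioc` target names follow the EVE-NG vMX how-to and are assumptions here; check them against the contents of your own bundle. `copy_disk` is a hypothetical helper, and `IMAGES` is assumed to point at the extracted `vmx/images` directory.

```shell
ADDONS="${ADDONS:-$(mktemp -d)}"   # on the EVE-NG host: /opt/unetlab/addons/qemu
IMAGES="${IMAGES:-vmx/images}"     # assumed location of the extracted images
RELEASE="19.4R1.10"
VCP_DIR="$ADDONS/vmxvcp-$RELEASE-VCP"

# copy_disk SRC DEST: copy one disk image, or report that it is missing.
copy_disk() {
    if [ -f "$1" ]; then
        mkdir -p "$(dirname "$2")" && cp "$1" "$2"
    else
        echo "skipping $1: file not found" >&2
    fi
}

copy_disk "$IMAGES/junos-vmx-x86-64-$RELEASE.qcow2" "$VCP_DIR/virtioa.qcow2"  # Junos system disk
copy_disk "$IMAGES/vmxhdd.img"                      "$VCP_DIR/virtiob.qcow2"  # second disk
copy_disk "$IMAGES/metadata-usb-re.img"             "$VCP_DIR/virtioc.qcow2"  # RE metadata disk
```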

For VFP, only one file needs to be copied:
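For the VFP, a sketch assuming the single file is the `vFPC-*.img` image from the bundle and that it becomes `virtioa.qcow2`, as in the EVE-NG vMX how-to. The image name carries a build date, so a glob is used rather than a guessed file name.

```shell
ADDONS="${ADDONS:-$(mktemp -d)}"   # on the EVE-NG host: /opt/unetlab/addons/qemu
IMAGES="${IMAGES:-vmx/images}"     # assumed location of the extracted images
RELEASE="19.4R1.10"
VFP_DIR="$ADDONS/vmxvfp-$RELEASE-VFP"

mkdir -p "$VFP_DIR"
# If the glob matches nothing, the loop sees the literal pattern and reports it.
for img in "$IMAGES"/vFPC-*.img; do
    if [ -f "$img" ]; then
        cp "$img" "$VFP_DIR/virtioa.qcow2"
    else
        echo "no vFPC image found in $IMAGES" >&2
    fi
done
```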

Fix the permissions by running the same script used in the vQFX section.

Juniper vSRX3.0

The cool thing about vSRX3.0 is that it’s a single image, so grab the qcow2 images for your desired release from Juniper Downloads, which should be named something like junos-vsrx3-x86-64-20.1R1.11.qcow2. Make the directory for it, place the images and fix the permissions:
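Put together, the three steps might look like the sketch below. It assumes the `vsrxng-` directory prefix and the `virtioa.qcow2` disk name that EVE-NG documents for vSRX 3.0; `SRC` is an assumed variable for the downloaded file, and the guards are additions so the snippet is harmless off the EVE-NG host.

```shell
ADDONS="${ADDONS:-$(mktemp -d)}"   # on the EVE-NG host: /opt/unetlab/addons/qemu
RELEASE="20.1R1.11"
VSRX_DIR="$ADDONS/vsrxng-$RELEASE"
SRC="${SRC:-junos-vsrx3-x86-64-$RELEASE.qcow2}"
WRAPPER=/opt/unetlab/wrappers/unl_wrapper

# 1. Make the directory.
mkdir -p "$VSRX_DIR"

# 2. Place the image under the expected disk name.
if [ -f "$SRC" ]; then
    mv "$SRC" "$VSRX_DIR/virtioa.qcow2"
else
    echo "skipping $SRC: file not found" >&2
fi

# 3. Fix the permissions (EVE-NG host only).
if [ -x "$WRAPPER" ]; then
    "$WRAPPER" -a fixpermissions
fi
```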

Here’s what my directory structure looks like:

Note on Multiple Releases

As you have probably noticed, the directory naming under /opt/unetlab/addons/qemu/ contains a prefix for the device name, disk type (RE, PFE) if needed, and the release. You can download multiple releases of the device and place them in their respective folders, then from the WebUI, when booting the node you will have the ability to select which release you would like to work with:

What about Linux?

The method I suggest when deploying Linux boxes inside of EVE-NG is to create your own disk image that is re-usable as many times as you want. Yes, you can run the installation of a Linux box from a CD image every time, but remember our goal here: Build Labs Fast.

Here are the procedures for deploying and creating a CentOS 7 image:

Start off by downloading the installation media and placing it within the image folder for the Linux host. We will use it for the first boot only:
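A sketch of placing the media, assuming the `linux-` directory prefix from the EVE-NG naming convention and the `cdrom.iso` file name that EVE-NG boots from. The directory label `centos7` and the ISO file name are hypothetical; substitute whatever you actually downloaded.

```shell
ADDONS="${ADDONS:-$(mktemp -d)}"            # on the EVE-NG host: /opt/unetlab/addons/qemu
LINUX_DIR="$ADDONS/linux-centos7"           # "centos7" is a free-form label
ISO="${ISO:-CentOS-7-x86_64-Minimal.iso}"   # hypothetical file name

mkdir -p "$LINUX_DIR"
if [ -f "$ISO" ]; then
    mv "$ISO" "$LINUX_DIR/cdrom.iso"
else
    echo "skipping $ISO: file not found" >&2
fi
```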

Create the disk image for the Linux host. Here I will specify the size as 100G, but it will be a thin-provisioned disk (it will not allocate 100G from the disk immediately):
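A minimal sketch using `qemu-img create`, assuming the disk is named `hda.qcow2` (the name this post refers to later when committing changes). If `qemu-img` is not on your `PATH`, EVE-NG ships its own copy under `/opt/qemu/bin/`.

```shell
ADDONS="${ADDONS:-$(mktemp -d)}"    # on the EVE-NG host: /opt/unetlab/addons/qemu
LINUX_DIR="$ADDONS/linux-centos7"   # "centos7" is a free-form label

mkdir -p "$LINUX_DIR"
if command -v qemu-img >/dev/null 2>&1; then
    # qcow2 grows on demand, so the 100G here is only the virtual size.
    qemu-img create -f qcow2 "$LINUX_DIR/hda.qcow2" 100G
    # Shows the 100G virtual size next to a much smaller on-disk size.
    qemu-img info "$LINUX_DIR/hda.qcow2"
else
    echo "qemu-img not on PATH; on EVE-NG try /opt/qemu/bin/qemu-img" >&2
fi
```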

Great. You can now start installing Linux. I would recommend connecting the node to the Cloud0 network to give it internet connectivity, in case you would like to have certain packages already installed when booting from the disk later. I also recommend creating a new lab for this process, which simplifies a later step that needs the Lab ID after the installation.

Once your typical Linux installation is completed, shut down the node, and delete the cdrom.iso file or move it out of the image directory.

Start the same node again, and make sure that the OS is working fine. You can configure or install any additional packages on the server at this stage. Once you’re done and happy with how it is, shut down the node again.

Now’s the important bit. You need to commit the changes made on the node’s disk back to the base disk of this Linux image. To start, click on the Lab Details button on the side bar, and copy the ID:

Navigate to that lab’s temp directory under /opt/unetlab/tmp/0/LAB-ID/1/, where LAB-ID is the ID you just copied, for example de1b35c8-8226-46d3-8c6a-944d4b92fddf.

There will be an hda.qcow2 file there. Run the following command:
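A sketch of the commit step, assuming the node's `hda.qcow2` is a qcow2 overlay whose backing file is the image under the addons directory, so that `qemu-img commit` merges the installation into that base image. The lab ID is the example from the text, and reading the `0` and `1` path components as pod and node number is my interpretation.

```shell
LAB_ID="${LAB_ID:-de1b35c8-8226-46d3-8c6a-944d4b92fddf}"   # example ID from the text
NODE_DIR="/opt/unetlab/tmp/0/$LAB_ID/1"                    # assumed: pod 0, node 1

if [ -f "$NODE_DIR/hda.qcow2" ]; then
    # Merge the overlay's changes into its backing (base) image.
    # On EVE-NG, /opt/qemu/bin/qemu-img can be used if qemu-img is not on PATH.
    (cd "$NODE_DIR" && qemu-img commit hda.qcow2)
else
    echo "no hda.qcow2 under $NODE_DIR; check the lab ID and node number" >&2
fi
```

Make sure the node is shut down before committing, as the text above says; committing while the disk is in use risks corrupting the image.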

As always, remember to fix the permissions by running the same script used for the other images.

That’s it. You can now create new Linux nodes using that template, and customize the specs as well. Let the fun begin.

What’s Next?

This part is best kept to your imagination. I discussed with a few friends who use EVE-NG before working on this post, just to get a feeling of what they are working on. The answers varied from JNCIE/CCIE preparations to fabric automation to SDN and cloud deployments. Share your thoughts! Here’s one lab I’m working on right now to do fabric provisioning using Contrail:

Enjoy!